Evaluations and benchmarks

Driving is a safety-critical multi-agent application, making careful evaluation and risk assessment essential. Mistakes in the real world are costly, so simulations are used to catch errors before deployment. To support rapid iteration, evaluations should ideally run efficiently. This is why we also paid attention to optimizing the speed of the evaluations. This page contains an overview of the available benchmarks and evals.

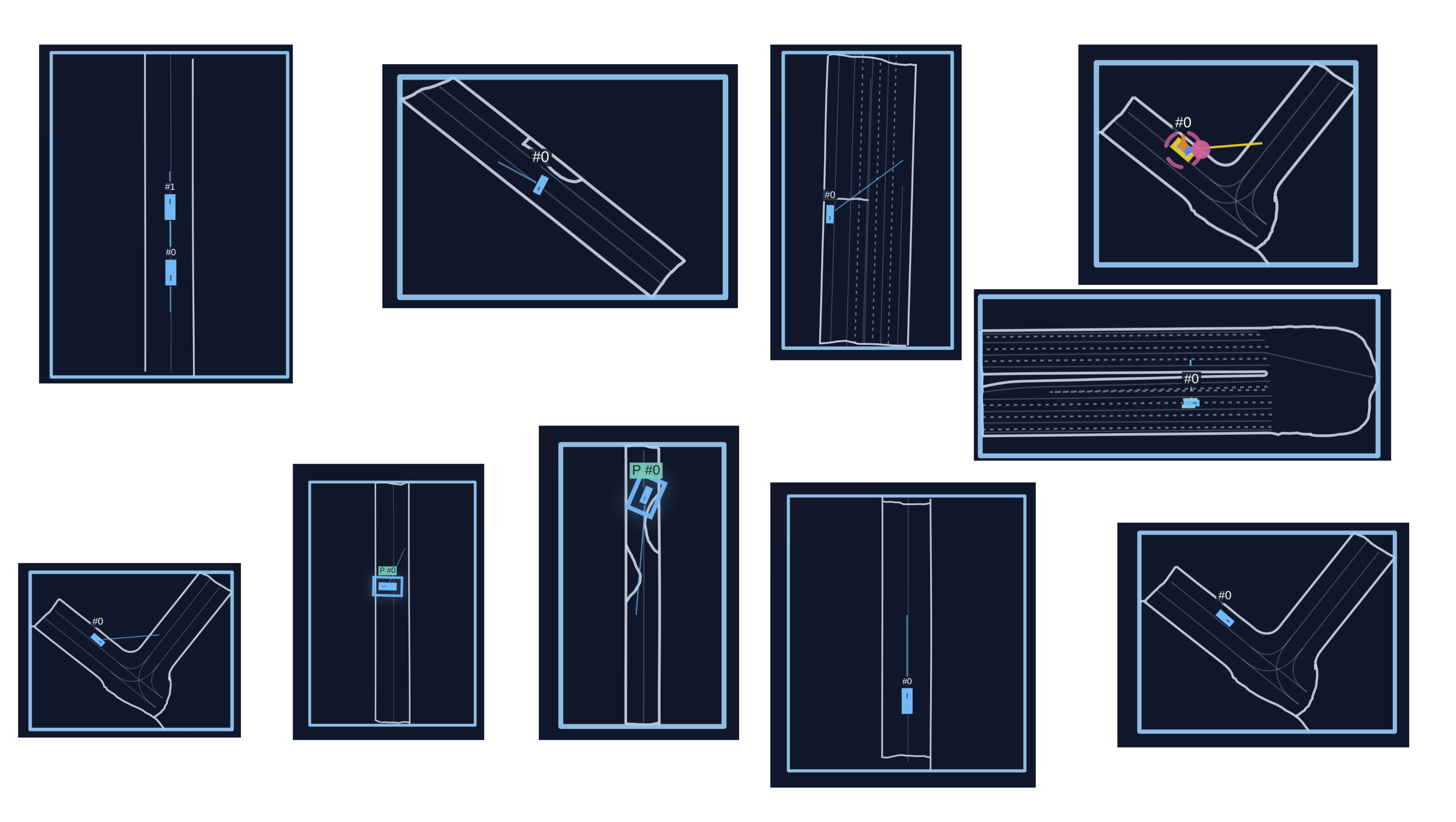

Sanity maps 🐛

Quickly test the training on curated, lightweight scenarios without downloading the full dataset. Each sanity map tests a specific behavior.

puffer sanity puffer_drive --wandb --wandb-name sanity-demo --sanity-maps forward_goal_in_front s_curve

Or run them all at once:

puffer sanity puffer_drive --wandb --wandb-name sanity-all

- Tip: turn learning-rate annealing off for these short runs (

--train.anneal_lr False) to keep the sanity checks from decaying the optimizer mid-run.

Available maps:

forward_goal_in_front: Straight approach to a goal in view.reverse_goal_behind: Backward start with a behind-the-ego goal.two_agent_forward_goal_in_front: Two agents advancing to forward goals.two_agent_reverse_goal_behind: Two agents reversing to rear goals.simple_turn: Single, gentle turn to a nearby goal.s_curve: S-shaped path with alternating curvature.u_turn: U-shaped turn to a goal behind the start.one_or_two_point_turn: Tight turn requiring a small reversal.three_or_four_point_turn: Even tighter turn needing multiple reversals.goal_out_of_sight: Goal starts without direct path; needs some planning.

Distributional realism benchmark 📊

We provide a PufferDrive implementation of the Waymo Open Sim Agents Challenge (WOSAC) for fast, easy evaluation of how well your trained agent matches distributional properties of human behavior.

puffer eval puffer_drive --eval.wosac-realism-eval True

Add --load-model-path <path_to_checkpoint>.pt to score a trained policy, instead of a random baseline.

See the WOSAC benchmark page for the metric pipeline and all the details.

Human-compatibility benchmark 🤝

You may be interested in how compatible your agent is with human partners. For this purpose, we support an eval where your policy only controls the self-driving car (SDC). The rest of the agents in the scene are stepped using the logs. While it is not a perfect eval since the human partners here are static, it will still give you a sense of how closely aligned your agent’s behavior is to how people drive. You can run it like this:

puffer eval puffer_drive --eval.human-replay-eval True --load-model-path <path_to_checkpoint>.pt

During this evaluation the self-driving car (SDC) is controlled by your policy while other agents replay log trajectories.